How to transfer from DX11 to EX9?

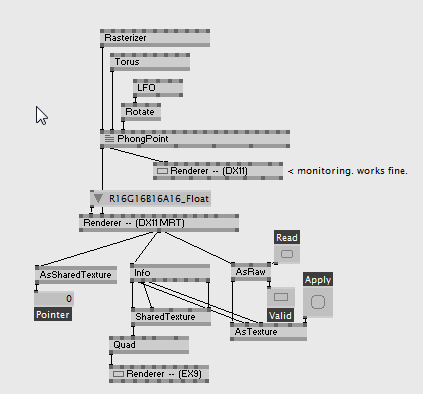

MRT is tricky to share; a proper temptarget is more stable for sharing. As far as I can remember, though, there's a DX11ToDX9 module that comes with dx11.

also why do you need the result in dx9? what's missing in dx11? (except some old stuff like AsVideo)

Actually, I know one scenario: when you need frame-synchronous contour detection with freeframe from a kinect2. I created an x64 vvvv instance for the kinect, shared its texture from dx11, and received it in dx9 in another x86 vvvv instance for the freeframe contour. It was hacky as hell, but the alternative with OpenCV was actually slower.

A couple of things to point out as well. Formats are not named the same in dx9: the dx11 R16G16B16A16_Float is A16B16G16R16F in dx9. The shared handle is not the same as the texture pointer, and you need to use the former. For texture sharing to work, the shareable texture has to end up in a renderer output; otherwise it is not considered used and hence is not created. On the dx9 side, the usage of the SharedTexture node should be set to rendertarget.
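To illustrate the naming difference, here is a minimal sketch of a lookup from dx11 (DXGI-style) format names to their dx9 counterparts. The table and the helper `dx9_format_name` are hypothetical and not part of vvvv or any pack; the pairs follow the Direct3D 9 `D3DFMT_*` names, where the half-float RGBA format is spelled A16B16G16R16F. Only a handful of common formats are listed.

```python
# Hypothetical lookup table: DXGI-style names (upper-cased) -> D3D9 names.
# Only a few common formats are covered; extend as needed.
DX11_TO_DX9_FORMAT = {
    "R16G16B16A16_FLOAT": "A16B16G16R16F",
    "R32G32B32A32_FLOAT": "A32B32G32R32F",
    "R16_FLOAT": "R16F",
    "R32_FLOAT": "R32F",
    "R16G16_FLOAT": "G16R16F",
    "R32G32_FLOAT": "G32R32F",
    "B8G8R8A8_UNORM": "A8R8G8B8",
    "R8G8B8A8_UNORM": "A8B8G8R8",
}


def dx9_format_name(dx11_name):
    """Return the dx9 name for a dx11 format name (case-insensitive)."""
    key = dx11_name.strip().upper()
    if key not in DX11_TO_DX9_FORMAT:
        raise ValueError(f"no dx9 equivalent listed for {dx11_name!r}")
    return DX11_TO_DX9_FORMAT[key]


if __name__ == "__main__":
    print(dx9_format_name("R16G16B16A16_Float"))
```

Both ends of a shared texture have to agree on the format, so a lookup like this is mostly useful as a reminder of which enum entry to pick on the dx9 side.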

Thanks, microdee.

I need to share via DX11 since DX9 fails to let the texture travel between adapters.

The rest of my setup is DX9, hence the desire to cast down.

In DX9, that was really easy using Writer (EX9.Geometry XFile) and Xfile (EX9.Geometry Load). Currently the objects are mapped using a pimped Pointeditor (3D Persistent Sandwich) and flushed into .x files.

I'm thinking of porting the whole project to DX11; right now I'm going to learn about saving mapped DX11 geometry to disk. But I can't find a corresponding geometry writing node.

The reason you can't find it is that .x is gone. In dx11 you have to save your geometry with a readback and your own method, because in dx11 geometry is only a large buffer of bytes on the GPU plus a bunch of metadata that tells the assemblers and shaders how to deal with it. You can customize that metadata without any constraints, which allows you to do way more than the dx9 meshes did, like a quad-based system instead of triangles, or triangles with adjacency information.
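As a rough idea of what "your own method" could look like once the vertex bytes have been read back, here is a minimal sketch that stores the metadata as a small JSON header followed by the raw buffer. Everything in it is an assumption made for illustration: the `GEOM` tag, the header fields, and the functions `save_geometry` and `load_geometry` are hypothetical and not a vvvv or DirectX format. The readback itself is not shown.

```python
import json
import struct

MAGIC = b"GEOM"  # hypothetical file tag, not an existing format


def save_geometry(path, vertex_bytes, layout, topology, stride):
    """Write a JSON metadata header followed by the raw vertex bytes."""
    if stride <= 0 or len(vertex_bytes) % stride != 0:
        raise ValueError("vertex data length must be a multiple of the stride")
    header = json.dumps({
        "layout": layout,          # list of input elements, free-form
        "topology": topology,      # e.g. "TriangleList", free-form
        "stride": stride,          # bytes per vertex
        "vertex_count": len(vertex_bytes) // stride,
    }).encode("utf-8")
    with open(path, "wb") as f:
        f.write(MAGIC)
        f.write(struct.pack("<I", len(header)))  # header length, little-endian
        f.write(header)
        f.write(vertex_bytes)


def load_geometry(path):
    """Read the file back and return (metadata dict, raw vertex bytes)."""
    with open(path, "rb") as f:
        if f.read(4) != MAGIC:
            raise ValueError("not a geometry file written by save_geometry")
        (header_len,) = struct.unpack("<I", f.read(4))
        meta = json.loads(f.read(header_len).decode("utf-8"))
        data = f.read()
    if len(data) != meta["stride"] * meta["vertex_count"]:
        raise ValueError("vertex data does not match the header")
    return meta, data
```

Because the layout and topology are stored as plain metadata, the same container works for triangles, quads, or anything else the shaders are set up to interpret; the loader only has to hand the bytes and the description back to whatever rebuilds the buffer.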